Mass Hunting for Leaked Sensitive Documents

A significant portion of crucial data and documents is now stored online as paper documents become less popular. Stories about leaked sensitive corporate documents appear every day. These documents, originally intended for internal use by employees, investors, or for managing internal business affairs, risk unauthorized disclosure if adequate precautions are not taken. Mistakes happen easily, especially in large companies, where thousands of employees make it hard to ensure that nobody slips up. For us bounty hunters, this can be a true gold mine, since those documents may contain PII or other sensitive data! In this article, I will cover my own approach to hunting for leaked sensitive documents on a mass scale.

Getting the Target domains

As far as I know, there is no single correct way to hack. You could target only one or a few programs at a time when looking for leaked sensitive documents. It really depends on the case and on your hacking style. For this particular example, I will show how I’ve made pretty decent bounties by hunting for leaked documents across all programs at once! To build a list of target domains, I usually combine multiple techniques. It does require a bit of manual work, but eventually this work should pay off.

1# ProjectDiscovery’s public bug bounty programs

As I mentioned in my Mass Hunting S3 Buckets article, I have a one-liner (slightly modified for this case) that collects target domains from ProjectDiscovery’s public bug bounty programs repo:

curl -s https://raw.githubusercontent.com/projectdiscovery/public-bugbounty-programs/main/chaos-bugbounty-list.json | jq ".[][] | select(.bounty==true) | .domains[]" -r > targets.txt

This bash one-liner curls the public bug bounty program list, keeps only the programs that pay bounties, extracts their domains, and saves the output to targets.txt. We will use this file later for our hunt.
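To see what the jq filter does without hitting the network, here is a minimal sketch that runs the same filter on a tiny hand-made sample. The sample JSON (program names, domains, and the top-level "programs" key) is hypothetical and only mimics the fields the filter touches; the helper name bounty_domains is also just for illustration.

```shell
# Print the domains of bounty-paying programs from a program list on stdin.
# Same jq filter as the one-liner: walk every program, keep bounty == true.
bounty_domains() {
  jq -r '.[][] | select(.bounty == true) | .domains[]'
}

# Hypothetical sample data; only the "bounty" and "domains" fields matter here.
sample='{"programs":[
  {"name":"Paid Co","bounty":true,"domains":["paid.example","shop.paid.example"]},
  {"name":"VDP Org","bounty":false,"domains":["vdp.example"]}
]}'

if command -v jq >/dev/null 2>&1; then
  printf '%s\n' "$sample" | bounty_domains
else
  echo "jq is required for this example" >&2
fi
```

With this sample, only the two domains of the paying program are printed; the VDP entry is dropped by select(.bounty == true).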

2# BBSCOPE tool from sw33tLie

If you want to save a lot of time, you could use this tool alone. Keep in mind, though, that you will probably need to inspect the collected data manually. It covers domain targets from several major bug bounty platforms:

HackerOne

bbscope h1 -a -u <username> -t <token> -b > bbscope-h1.txt

Bugcrowd

bbscope bc -t <token> -b > bbscope-bc.txt

Intigriti

bbscope it -t <token> -b > bbscope-it.txt

Note: Manually inspect all findings, then add the domains without wildcards to targets.txt and the domains with wildcards to targets-wildcards.txt.
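Part of that sorting can be scripted. The sketch below is one possible helper, not part of bbscope: it assumes the collected scope has one entry per line and treats anything starting with "*." as a wildcard. The function name split_scope is made up for this example, and the result still deserves a manual look, since scope lists can contain URLs, IP ranges, and other entries that are not plain domains.

```shell
# Split a mixed scope list (one entry per line on stdin) into two files:
# entries starting with "*." are appended to the wildcard file,
# everything else is appended to the plain file.
split_scope() {
  plain_file=$1
  wildcard_file=$2
  # Drop carriage returns, surrounding whitespace and blank lines first.
  tr -d '\r' | sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e '/^$/d' |
    while IFS= read -r entry; do
      case $entry in
        '*.'*) printf '%s\n' "$entry" >> "$wildcard_file" ;;
        *)     printf '%s\n' "$entry" >> "$plain_file" ;;
      esac
    done
}
```

A typical call would be cat bbscope-*.txt | split_scope targets.txt targets-wildcards.txt. The helper only appends, so run the files through sort -u afterwards if duplicates matter.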

Optional: Arkadiyt’s bounty targets data

There is another repository on GitHub that can be used to build a target list for all programs: Arkadiyt’s bounty-targets-data, which is updated automatically every 30 minutes! Unfortunately, there is no way to tell whether a domain from this repository belongs to a VDP or a BBP (at least for domains.txt and wildcards.txt). If you want to be a good Samaritan and don’t care that much about getting paid, you could follow this step, but keep in mind that your target list will be filled with VDP targets!

curl -s "https://raw.githubusercontent.com/arkadiyt/bounty-targets-data/main/data/domains.txt" | anew targets.txt

curl -s "https://raw.githubusercontent.com/arkadiyt/bounty-targets-data/main/data/wildcards.txt" | anew targets-wildcards.txt
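Wildcard entries in these lists usually look like *.example.com. Whether every downstream tool accepts the leading "*." is not something this article verifies, so the following is an optional sketch for producing bare root domains. It assumes one entry per line; the function name normalise_wildcards is hypothetical.

```shell
# Normalise wildcard scope entries read from stdin:
#  - strip a leading "*."
#  - lowercase everything
#  - skip entries that still contain "*" (e.g. "api.*.example.com")
#  - sort and remove duplicates
normalise_wildcards() {
  sed -e 's/^\*\.//' | tr 'A-Z' 'a-z' | grep -v '\*' | sort -u
}
```

For example, normalise_wildcards < targets-wildcards.txt > wildcard-roots.txt would write the cleaned list to a new file (the output filename is just an example) and leave the original untouched.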

Preparing VPS for Mass Hunting PDF Files

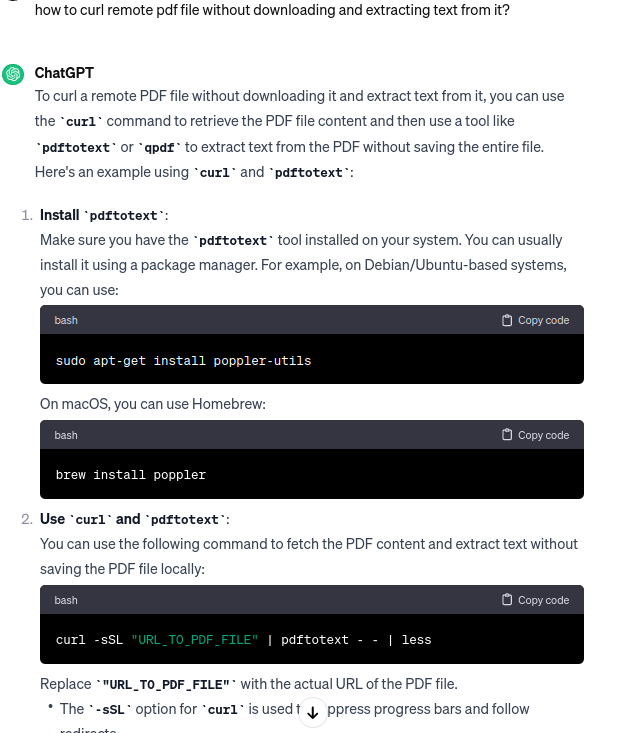

If you follow my stories, you probably know that I am a big fan of Axiom. I have already covered how to back up a VPS instance so that it can be replicated and scaled for bug bounties. To hunt for sensitive PDF files on a mass scale, I want the pdftotext CLI utility on each Axiom instance. With this utility, I can convert a PDF to text and then grep for sensitive words (potential leaks or PII). For this particular case, ChatGPT helped me a bit to prepare an Axiom instance:

As we can see, to have pdftotext on each instance, I need to run sudo apt-get install poppler-utils on the target machine. Once it is installed and the instance is backed up, it is almost ready to use.
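Before backing up the instance, it can help to confirm that everything the scan relies on is actually on the PATH. This is a small sketch of such a check; the function name missing_tools is made up for illustration, and the tool list in the usage note simply mirrors the commands used later in this article.

```shell
# Print every tool from the argument list that is not found on PATH.
# Returns 0 when all tools are present, 1 when at least one is missing.
missing_tools() {
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      printf '%s\n' "$tool"
      status=1
    fi
  done
  return "$status"
}
```

Running missing_tools pdftotext gau httpx curl on the instance prints nothing when the setup is complete. If pdftotext shows up in the output, sudo apt-get install poppler-utils is the fix.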

Scanning the Targets For Big Bucks

I have also created a custom module containing a one-liner that collects sensitive data from PDFs, with subdomains of wildcard targets included:

[{
"command":"for i in `cat input | gau --subs --threads 16 | grep -Ea '\\.pdf' | httpx -silent -mc 200`; do if curl -s \"$i\" | pdftotext -q - - | grep -Eaiq 'internal use only|confidential'; then echo \"$i\" | tee -a output; fi; done",
"ext":"txt"
}]

~/.axiom/modules/gau-pdfs.json

Let’s break down what this one-liner does:

- Use gau to gather endpoints, including those of subdomains, from sources such as the Wayback Machine and urlscan.
- Keep only the endpoints with a .pdf extension.
- Check that each endpoint is alive with httpx by matching the 200 status code.
- For each live endpoint:
  - Curl the endpoint.
  - Convert the PDF to text.
  - Grep for sensitive phrases such as "internal use only" or "confidential".
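The steps above can also be written out as a small script, which is easier to read and tweak than the JSON-escaped one-liner. Treat this as a sketch under a few assumptions: the function names are made up, the 30-second curl timeout is an arbitrary choice, and the URL filter is deliberately stricter than the one-liner's grep (it only accepts URLs whose path ends in .pdf, optionally followed by a query string or fragment).

```shell
# Keep only URLs whose path ends in .pdf (query string or fragment allowed).
# Note: stricter than the one-liner, which matches ".pdf" anywhere in the URL.
filter_pdf_urls() {
  grep -Eai '\.pdf([?#].*)?$'
}

# Succeed if the text on stdin contains one of the marker phrases.
has_sensitive_marker() {
  grep -Eaiq 'internal use only|confidential'
}

# Download one PDF, convert it to text, print the URL if a marker is found.
# Needs curl and pdftotext (poppler-utils); the timeout value is an assumption.
check_pdf_url() {
  if curl -s --max-time 30 "$1" | pdftotext -q - - 2>/dev/null | has_sensitive_marker; then
    printf '%s\n' "$1"
  fi
}

# Full pipeline: domains on stdin, URLs of matching PDFs on stdout.
# Needs gau and httpx on PATH, same flags as in the module.
hunt_pdfs() {
  gau --subs --threads 16 | filter_pdf_urls | httpx -silent -mc 200 |
    while IFS= read -r url; do
      check_pdf_url "$url"
    done
}
```

Once the functions are defined, hunt_pdfs < targets-wildcards.txt runs the whole chain locally on a single machine. Reading URLs with while read instead of a backtick for-loop also avoids word splitting on unusual URLs.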

You can get creative and modify this script to suit your own needs: for example, use katana instead of gau, check for other sensitive words, or look for other file extensions. A creative approach of your own brings in the most bounties!
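As one possible direction for such tweaks, the keyword list and the extension filter can be pulled out of the command so they are easy to swap. The sketch below is illustrative only: ext_regex and matches_keywords are hypothetical helper names, and the keywords file is an assumed plain-text file with one pattern per line. Keep in mind that pdftotext only handles PDFs, so other document types would need a different converter in the pipeline.

```shell
# Build an extended regex matching any of the given extensions at the end
# of a URL path, e.g. "pdf docx" -> \.(pdf|docx)([?#].*)?$
ext_regex() {
  printf '%s' '\.('
  printf '%s' "$*" | tr -s ' ' '|'
  printf '%s\n' ')([?#].*)?$'
}

# Succeed if stdin matches any pattern listed (one per line) in the file $1.
# Avoid empty lines in that file: an empty pattern matches every input.
matches_keywords() {
  grep -Eaiq -f "$1"
}
```

For instance, grep -Eai "$(ext_regex pdf docx xlsx)" would replace the .pdf filter, and matches_keywords keywords.txt would replace the hard-coded grep for "internal use only" and "confidential".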

Finally, use axiom-scan for targets with subdomains:

axiom-scan targets-wildcards.txt -m gau-pdfs -anew pdf-leak-findings.txt

If you want to use it on targets without subdomains included, you need to modify the module by removing the --subs flag:

[{
"command":"for i in `cat input | gau --threads 16 | grep -Ea '\\.pdf' | httpx -silent -mc 200`; do if curl -s \"$i\" | pdftotext -q - - | grep -Eaiq 'internal use only|confidential'; then echo \"$i\" | tee -a output; fi; done",
"ext":"txt"
}]

~/.axiom/modules/gau-pdfs.json

Then run the command for targets without wildcard subdomains:

axiom-scan targets.txt -m gau-pdfs -anew pdf-leak-findings.txt

Final bits

I’ve covered how it’s possible to do bug bounties on a mass scale using a pretty creative approach. You could try this with other document types such as doc, docx, and xlsx, as there are many other places where some oopsies could occur.

I am active on Twitter, check out some content I post there daily! If you are interested in video content, check my YouTube. Also, if you want to reach me personally, you can visit my Discord server. Cheers!